AARHUS (Denmark): Facebook’s Oversight Board has announced that users can now submit appeals on content removal to the global body for an independent review.

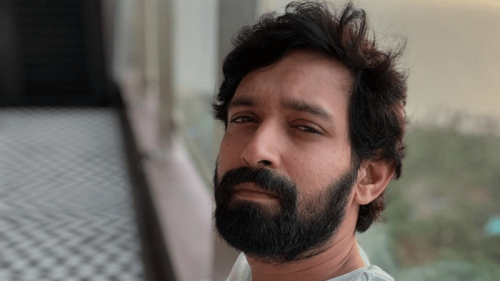

In May, Facebook appointed 20 people from around the world to serve on what will effectively be the social media network’s “Supreme Court” for speech, issuing rulings on what kind of posts will be allowed and what should be taken down. The list features a former prime minister, a Nobel Peace Prize laureate and several constitutional law experts and rights advocates, including Nighat Dad, a Pakistani lawyer and the founder of the Digital Rights Foundation (DRF).

The Oversight Board is a global body that will make independent decisions on whether specific content should be allowed or removed from Facebook and Instagram.

Facebook can also refer cases for a decision about whether content should remain up or come down from either Facebook or Instagram.


“The board is eager to get to work,” said Catalina Botero-Marino, Co-Chair of the Oversight Board, in a statement. “We won’t be able to hear every appeal, but want our decisions to have the widest possible value, and will be prioritising cases that have the potential to impact many users around the world, are of critical importance to public discourse, and raise questions about Facebook’s policies,” she said.

According to a statement, users can submit an eligible case for review through the Oversight Board website, once they have exhausted their content appeals with Facebook. Facebook can also refer cases to the board on an ongoing basis, including in emergency circumstances under the ‘expedited review’ procedure.

“Content that could lead to urgent, real-world consequences will be reviewed as quickly as possible,” said Jamal Greene, Co-Chair of the Oversight Board.

“The board provides a critical independent check on Facebook’s approach to moderating content on the most significant issues, but doesn’t remove the responsibility of Facebook to act first and to act fast in emergencies,” Greene added.

How does the board work?

After selection, cases will be assigned to a five-member panel with at least one member from the region implicated in the content. No single board member makes a decision alone.

“A five-member panel deliberating over a case of content implicating Pakistan would include at least one board member from Central and South Asia, though this may not necessarily be Nighat Dad,” a board spokesperson had told Dawn in May.

Facebook has long faced criticism over high-profile content moderation issues, including the removal of pro-Kashmir posts and hate speech in Myanmar against the Rohingya and other Muslims.

Cases will be decided on the basis of both Facebook’s community standards and values and international human rights standards. In addition to accepting cases, the board can now recommend changes to Facebook’s community standards alongside its decisions.

Each case will have a public comment period to allow third parties to share their insights with the board. Case descriptions will be posted on the board website with a request for public comment before the board begins deliberations. These descriptions will not include any information which could potentially identify the users involved in a case.

“Human rights and freedom of expression will be at the core of every decision we make,” said Botero-Marino. “These cases will have far-reaching, real-world consequences. It is our job to ensure we are serving users and holding Facebook accountable.”

The board expects to reach case decisions and Facebook to act on these decisions within a maximum of 90 days.

Published in Dawn, October 23rd, 2020